We consider view synthesis via depth-image-based rendering in multi-view imaging. We formulate a resource allocation problem of jointly assigning the optimal number of bits to the compressed texture and depth images, such that the maximum distortion of a synthesized view over a continuum of viewpoints between two encoded reference views is minimized for a given bit budget. We construct simple yet accurate image models for the optimization, in which pixels at similar depths are modeled by first-order Gaussian auto-regressive processes. Based on these models, we derive an optimization procedure that numerically solves the formulated min-max problem using Lagrangian relaxation. Our simulation results show that, relative to a heuristic quantization that allocates equal rates to every encoded image, our optimization provides a significant gain (up to 2 dB) in the quality of the synthesized views at the same overall bit rate.
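For concreteness, the allocation problem described above can be sketched as follows. The notation is assumed here for illustration only and is not taken from the formulation itself: $\mathbf{r} = (r_1, \dots, r_N)$ collects the bits assigned to the encoded texture and depth images, $x \in [0,1]$ is the synthesis viewpoint location normalized between the two reference views, $D_x(\mathbf{r})$ is the distortion of the view synthesized at $x$, and $R$ is the bit budget.

$$
\min_{\mathbf{r}} \; \max_{x \in [0,1]} \; D_x(\mathbf{r})
\quad \text{subject to} \quad \sum_{i=1}^{N} r_i \le R .
$$

One common form of Lagrangian relaxation, given as a sketch and not necessarily the exact procedure used here, moves the budget constraint into the objective with a multiplier $\lambda \ge 0$ that is adjusted until the budget is met:

$$
\min_{\mathbf{r}} \; \Big[ \max_{x \in [0,1]} D_x(\mathbf{r}) + \lambda \sum_{i=1}^{N} r_i \Big] .
$$

A first-order Gaussian auto-regressive process, the model assumed for pixels at similar depths, has the standard form

$$
s_n = \rho \, s_{n-1} + w_n, \qquad w_n \sim \mathcal{N}(0, \sigma_w^2), \qquad |\rho| < 1,
$$

where the symbols $s_n$ (pixel value), $\rho$ (correlation coefficient), and $\sigma_w^2$ (innovation variance) are again illustrative names.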

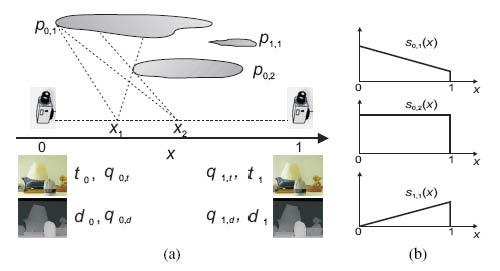

Figure 1. (a) The coding problem setup. The example texture and depth images shown are taken from the Midd2 data set. (b) The sizes of the visible portions of three patches are approximated by linear functions of the synthesis viewpoint location x.