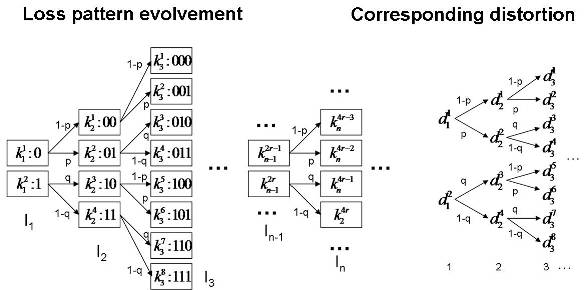

This work addresses the problem of distortion modeling for video transmission over burst-loss channels characterized by a finite-state Markov chain. Based on a detailed analysis of error propagation and bursty losses, we propose a distortion trellis model that estimates, at both the frame level and the sequence level, the expected mean-square error (MSE) distortion caused by Markov-model burst packet losses. The model accounts for the temporal dependencies induced by both the motion-compensated coding scheme and the Markov-model channel losses. It is applicable to most block-based motion-compensated encoders and to most Markov-model lossy channels, provided that the loss pattern probabilities for the channel are computable. Based on a study of the decaying behavior of error propagation, we develop a sliding window algorithm that performs the MSE estimation with low complexity. Simulation results show that the proposed models are accurate for all tested average loss rates and average burst lengths. Building on these results, we use the proposed techniques to analyze the impact of factors such as average burst length on the average decoded video quality. The model is further extended to a more general form, and the modeled distortion is compared with data produced from realistic network loss traces. The experimental results demonstrate that the proposed model also accurately estimates the expected distortion for video transmission in real networks.
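The model only requires that loss pattern probabilities be computable for the Markov channel. As a minimal sketch, assuming the simplest such channel (a two-state Gilbert model in which state 0 means "packet received" and state 1 means "packet lost"), the transition probabilities can be derived from the average loss rate and average burst length, and the probability of any loss pattern follows from the chain rule. The function names `gilbert_params` and `pattern_probability` are hypothetical and are not taken from the paper.

```python
from itertools import product


def gilbert_params(loss_rate, mean_burst):
    """Return (p, q) for a two-state Gilbert channel (assumed model).

    p = P(received -> lost), q = P(lost -> received).
    Mean burst length is 1/q; stationary loss rate is p / (p + q).
    """
    if not 0.0 < loss_rate < 1.0:
        raise ValueError("loss_rate must be in (0, 1)")
    if mean_burst < 1.0:
        raise ValueError("mean_burst must be >= 1")
    q = 1.0 / mean_burst
    p = q * loss_rate / (1.0 - loss_rate)
    if p > 1.0:
        raise ValueError("no valid chain for this loss rate / burst length")
    return p, q


def pattern_probability(pattern, p, q):
    """Probability of a loss pattern (1 = lost, 0 = received),
    assuming the chain starts in its stationary distribution."""
    P = [[1.0 - p, p], [q, 1.0 - q]]  # rows: current state, cols: next state
    pi_lost = p / (p + q)             # stationary probability of "lost"
    prob = pi_lost if pattern[0] else 1.0 - pi_lost
    for prev, cur in zip(pattern, pattern[1:]):
        prob *= P[prev][cur]
    return prob


if __name__ == "__main__":
    p, q = gilbert_params(loss_rate=0.1, mean_burst=2.0)
    # Sanity check: the probabilities of all length-4 patterns sum to one.
    total = sum(pattern_probability(s, p, q) for s in product((0, 1), repeat=4))
    print(f"p={p:.4f}, q={q:.4f}, total={total:.6f}")
```

A finite-state Markov channel with more than two states would replace the 2×2 matrix with a larger one, but the chain-rule computation of a pattern's probability stays the same.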

Figure 1. Trellises describing the evolution of loss patterns (left) and video distortion values (right), as employed in our modeling framework.
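The pairing of a loss-pattern trellis with a distortion trellis, together with the sliding-window approximation, can be illustrated with a toy model. This is not the paper's distortion model. It assumes one packet per frame, a two-state Markov (Gilbert) channel with transition probabilities `p` (received to lost) and `q` (lost to received), and a hypothetical distortion recursion: distortion decays by a factor `alpha` per frame, and a lost frame adds `d_loss` times the current burst length, on the reasoning that concealment copies from an ever older frame. Frames before the window are assumed error-free, so in this toy model the truncation error shrinks roughly like `alpha**window`. The sketch enumerates all `2**window` trellis paths by brute force. A practical implementation would merge trellis states instead. All names and parameters are hypothetical.

```python
from itertools import product


def expected_frame_distortion(p, q, window, alpha, d_loss):
    """Expected MSE of the newest frame under a toy burst-loss model.

    Only the last `window` frames are modeled (sliding window);
    earlier frames are assumed error-free.
    """
    P = [[1.0 - p, p], [q, 1.0 - q]]    # 0 = received, 1 = lost
    pi = [q / (p + q), p / (p + q)]     # stationary distribution
    expected = 0.0
    for pattern in product((0, 1), repeat=window):  # every trellis path
        # Path probability along the loss-pattern trellis.
        prob = pi[pattern[0]]
        for prev, cur in zip(pattern, pattern[1:]):
            prob *= P[prev][cur]
        if prob == 0.0:
            continue  # path is impossible under this channel
        # Matching path through the distortion trellis.
        dist, run = 0.0, 0
        for lost in pattern:
            run = run + 1 if lost else 0        # current burst length
            dist = alpha * dist + d_loss * run  # decay plus new concealment error
        expected += prob * dist
    return expected


if __name__ == "__main__":
    loss_rate = 0.1
    for mean_burst in (1.0, 2.0, 4.0):
        q = 1.0 / mean_burst
        p = q * loss_rate / (1.0 - loss_rate)
        d = expected_frame_distortion(p, q, window=10, alpha=0.5, d_loss=1.0)
        print(f"mean burst {mean_burst}: expected MSE {d:.4f}")
```

Because the toy recursion is nonlinear in the loss pattern (through the burst length), the expected distortion depends on the average burst length and not only on the average loss rate. This is the kind of burst-length effect the paper's model is designed to capture.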